MTCNN

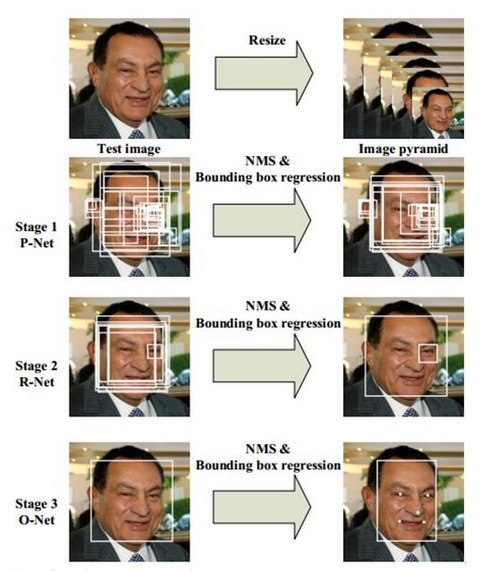

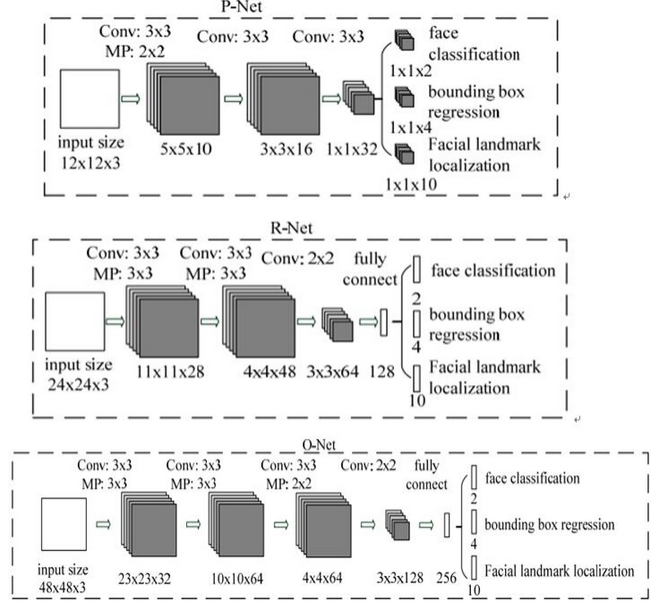

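The MTCNN algorithm consists of three networks: PNet, RNet and ONet. PNet is a fully convolutional network that outputs face locations and face probabilities. It is run on every scale of an image pyramid, and each pixel of its output feature map gives an encoded face bounding box together with the probability that the corresponding window is a face; thresholding followed by non-maximum suppression (NMS) then yields the candidate face regions (ROIs). The second network, RNet, refines these ROIs: every ROI from the first stage is resized to 24×24 and classified again, giving refined face-box coordinates and a face probability for each ROI. The third network, ONet, processes the face regions kept by RNet once more (resized to 48×48) and outputs whether each region is a face, its box coordinates, and five facial landmarks.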
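A minimal Python sketch of two ideas from the paragraph above: the image pyramid, and the mapping of one PNet output pixel back to a window in the original image. It assumes the defaults used by the MXNet code below (12-pixel PNet window, stride 2, `minsize=20`, `factor=0.709`); `pyramid_scales` and `cell_to_box` are hypothetical helper names, not functions of either project.

```python
MIN_DET_SIZE = 12  # PNet looks at 12x12 windows


def pyramid_scales(height, width, minsize=20, factor=0.709):
    """Scales at which PNet is run (same loop as detect_face() in the MXNet code)."""
    m = MIN_DET_SIZE / float(minsize)  # a minsize-pixel face becomes a 12-pixel face
    minl = min(height, width) * m
    scales = []
    count = 0
    while minl > MIN_DET_SIZE:
        scales.append(m * factor ** count)
        minl *= factor
        count += 1
    return scales


def cell_to_box(row, col, scale, stride=2, cellsize=12):
    """Map PNet output pixel (row, col) at a given scale to (x1, y1, x2, y2) in the original image."""
    x1 = int(round((stride * col + 1) / scale))
    y1 = int(round((stride * row + 1) / scale))
    x2 = int(round((stride * col + 1 + cellsize) / scale))
    y2 = int(round((stride * row + 1 + cellsize) / scale))
    return x1, y1, x2, y2


scales = pyramid_scales(100, 100)
print(len(scales), scales[0])   # 5 0.6
print(cell_to_box(3, 5, 0.5))   # (22, 14, 46, 38)
```

Each pyramid level shrinks the image by `factor`, so the face size that fills a 12-pixel window grows by about 1/0.709 ≈ 1.41 per level.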

Algorithm framework

Test image

Test results

The following project is an MXNet implementation of MTCNN:

Project URL: https://github.com/pangyupo/mxnet_mtcnn_face_detection

```python
# coding: utf-8
# main.py
import mxnet as mx
from mtcnn_detector import MtcnnDetector
import cv2
import os
import time

detector = MtcnnDetector(model_folder='model', ctx=mx.cpu(0), num_worker=4, accurate_landmark=False)

img = cv2.imread('test.jpg')

# run the detector several times to measure its speed
for i in range(4):
    t1 = time.time()
    results = detector.detect_face(img)
    print('time: %f' % (time.time() - t1))

if results is not None:
    total_boxes = results[0]
    points = results[1]

    draw = img.copy()
    for b in total_boxes:
        cv2.rectangle(draw, (int(b[0]), int(b[1])), (int(b[2]), int(b[3])), (100, 255, 0))

    # points is n x 10: x1..x5 followed by y1..y5; OpenCV needs integer coordinates
    for p in points:
        for i in range(5):
            cv2.circle(draw, (int(p[i]), int(p[i + 5])), 1, (0, 0, 255), 2)

    cv2.imshow("detection result", draw)
    cv2.waitKey(0)

    path = os.path.join('result' + '.jpg')
    cv2.imwrite(path, draw)

# --------------
# test on camera
# --------------
'''
camera = cv2.VideoCapture(0)
while True:
    grab, frame = camera.read()
    img = cv2.resize(frame, (320,180))

    t1 = time.time()
    results = detector.detect_face(img)
    print('time: %f' % (time.time() - t1))

    if results is None:
        continue

    total_boxes = results[0]
    points = results[1]

    draw = img.copy()
    for b in total_boxes:
        cv2.rectangle(draw, (int(b[0]), int(b[1])), (int(b[2]), int(b[3])), (255, 255, 255))

    for p in points:
        for i in range(5):
            cv2.circle(draw, (int(p[i]), int(p[i + 5])), 1, (255, 0, 0), 2)
    cv2.imshow("detection result", draw)
    cv2.waitKey(30)
'''
```
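`detect_face()` returns the landmarks as an n × 10 array with the five x coordinates first and the five y coordinates after them, which is why the drawing loop pairs `p[i]` with `p[i + 5]`. A small sketch that converts this layout into per-face lists of integer `(x, y)` tuples, the form OpenCV drawing calls accept; `landmarks_as_pairs` is a hypothetical helper, and the point order (left eye, right eye, nose, left and right mouth corners) is assumed from `drawPoints()` in the Caffe demo further down.

```python
import numpy as np


def landmarks_as_pairs(points):
    """(n, 10) array [x1..x5, y1..y5] -> list of n lists of five (x, y) int tuples."""
    points = np.asarray(points)
    faces = []
    for p in points:
        # pair the i-th x with the i-th y, rounding to integer pixel positions
        faces.append([(int(round(p[i])), int(round(p[i + 5]))) for i in range(5)])
    return faces


pts = np.array([[10, 20, 15, 11, 19, 30, 30, 40, 50, 50]], dtype=float)
print(landmarks_as_pairs(pts))
# [[(10, 30), (20, 30), (15, 40), (11, 50), (19, 50)]]
```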

```python
# coding: utf-8
# mtcnn_detector.py
import os
import mxnet as mx
import numpy as np
import math
import cv2
from multiprocessing import Pool
from itertools import repeat
from helper import nms, adjust_input, generate_bbox, detect_first_stage_warpper


class MtcnnDetector(object):
    """
        Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Neural Networks
        see https://github.com/kpzhang93/MTCNN_face_detection_alignment
        this is an mxnet version
    """
    def __init__(self,
                 model_folder='.',
                 minsize=20,
                 threshold=[0.6, 0.7, 0.7],
                 factor=0.709,
                 num_worker=1,
                 accurate_landmark=False,
                 ctx=mx.gpu()):
        """
            Initialize the detector

            Parameters:
            ----------
                model_folder : string
                    path for the models
                minsize : float number
                    minimal face to detect
                threshold : list of 3 float numbers
                    detect threshold for the 3 stages
                factor: float number
                    scale factor for image pyramid
                num_worker: int number
                    number of processes we use for first stage
                accurate_landmark: bool
                    use accurate landmark localization or not
        """
        self.num_worker = num_worker
        self.accurate_landmark = accurate_landmark

        # load 4 models from folder
        models = ['det1', 'det2', 'det3', 'det4']
        models = [os.path.join(model_folder, f) for f in models]

        self.PNets = []
        for i in range(num_worker):
            worker_net = mx.model.FeedForward.load(models[0], 1, ctx=ctx)
            self.PNets.append(worker_net)

        self.Pool = Pool(num_worker)

        self.RNet = mx.model.FeedForward.load(models[1], 1, ctx=ctx)
        self.ONet = mx.model.FeedForward.load(models[2], 1, ctx=ctx)
        self.LNet = mx.model.FeedForward.load(models[3], 1, ctx=ctx)

        self.minsize = float(minsize)
        self.factor = float(factor)
        self.threshold = threshold

    def convert_to_square(self, bbox):
        """
            convert bbox to square

        Parameters:
        ----------
            bbox: numpy array, shape n x 5
                input bbox

        Returns:
        -------
            square bbox
        """
        square_bbox = bbox.copy()

        h = bbox[:, 3] - bbox[:, 1] + 1
        w = bbox[:, 2] - bbox[:, 0] + 1
        max_side = np.maximum(h, w)
        square_bbox[:, 0] = bbox[:, 0] + w*0.5 - max_side*0.5
        square_bbox[:, 1] = bbox[:, 1] + h*0.5 - max_side*0.5
        square_bbox[:, 2] = square_bbox[:, 0] + max_side - 1
        square_bbox[:, 3] = square_bbox[:, 1] + max_side - 1
        return square_bbox

    def calibrate_box(self, bbox, reg):
        """
            calibrate bboxes

        Parameters:
        ----------
            bbox: numpy array, shape n x 5
                input bboxes
            reg: numpy array, shape n x 4
                bbox adjustment

        Returns:
        -------
            bboxes after refinement
        """
        w = bbox[:, 2] - bbox[:, 0] + 1
        w = np.expand_dims(w, 1)
        h = bbox[:, 3] - bbox[:, 1] + 1
        h = np.expand_dims(h, 1)
        reg_m = np.hstack([w, h, w, h])
        aug = reg_m * reg
        bbox[:, 0:4] = bbox[:, 0:4] + aug
        return bbox

    def pad(self, bboxes, w, h):
        """
            pad the bboxes, also restrict the size of it

        Parameters:
        ----------
            bboxes: numpy array, n x 5
                input bboxes
            w: float number
                width of the input image
            h: float number
                height of the input image
        Returns:
        -------
            dy, dx : numpy array, n x 1
                start point of the bbox in target image
            edy, edx : numpy array, n x 1
                end point of the bbox in target image
            y, x : numpy array, n x 1
                start point of the bbox in original image
            ey, ex : numpy array, n x 1
                end point of the bbox in original image
            tmph, tmpw: numpy array, n x 1
                height and width of the bbox
        """
        tmpw, tmph = bboxes[:, 2] - bboxes[:, 0] + 1, bboxes[:, 3] - bboxes[:, 1] + 1
        num_box = bboxes.shape[0]

        dx, dy = np.zeros((num_box, )), np.zeros((num_box, ))
        edx, edy = tmpw.copy()-1, tmph.copy()-1

        # copies, so that the clipping below does not modify the caller's bboxes
        x, y, ex, ey = bboxes[:, 0].copy(), bboxes[:, 1].copy(), bboxes[:, 2].copy(), bboxes[:, 3].copy()

        tmp_index = np.where(ex > w-1)
        edx[tmp_index] = tmpw[tmp_index] + w - 2 - ex[tmp_index]
        ex[tmp_index] = w - 1

        tmp_index = np.where(ey > h-1)
        edy[tmp_index] = tmph[tmp_index] + h - 2 - ey[tmp_index]
        ey[tmp_index] = h - 1

        tmp_index = np.where(x < 0)
        dx[tmp_index] = 0 - x[tmp_index]
        x[tmp_index] = 0

        tmp_index = np.where(y < 0)
        dy[tmp_index] = 0 - y[tmp_index]
        y[tmp_index] = 0

        return_list = [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph]
        return_list = [item.astype(np.int32) for item in return_list]

        return return_list

    def slice_index(self, number):
        """
            slice the index into (n,n,m), m < n
        Parameters:
        ----------
            number: int number
                number
        """
        def chunks(l, n):
            """Yield successive n-sized chunks from l."""
            for i in range(0, len(l), n):
                yield l[i:i + n]
        num_list = range(number)
        return list(chunks(num_list, self.num_worker))

    def detect_face(self, img):
        """
            detect face over img
        Parameters:
        ----------
            img: numpy array, bgr order of shape (h, w, 3)
                input image
        Returns:
        -------
            bboxes: numpy array, n x 5 (x1,y1,x2,y2,score)
                bboxes
            points: numpy array, n x 10 (x1, x2 ... x5, y1, y2 ..y5)
                landmarks
        """

        # check input
        MIN_DET_SIZE = 12

        if img is None:
            return None

        # only works for color image
        if len(img.shape) != 3:
            return None

        # detected boxes
        total_boxes = []

        height, width, _ = img.shape
        minl = min(height, width)

        # get all the valid scales
        scales = []
        m = MIN_DET_SIZE/self.minsize
        minl *= m
        factor_count = 0
        while minl > MIN_DET_SIZE:
            scales.append(m*self.factor**factor_count)
            minl *= self.factor
            factor_count += 1

        #############################################
        # first stage
        #############################################
        #for scale in scales:
        #    return_boxes = self.detect_first_stage(img, scale, 0)
        #    if return_boxes is not None:
        #        total_boxes.append(return_boxes)

        sliced_index = self.slice_index(len(scales))
        total_boxes = []
        for batch in sliced_index:
            local_boxes = self.Pool.map(detect_first_stage_warpper,
                    zip(repeat(img), self.PNets[:len(batch)], [scales[i] for i in batch], repeat(self.threshold[0])))
            total_boxes.extend(local_boxes)

        # remove the Nones
        total_boxes = [i for i in total_boxes if i is not None]

        if len(total_boxes) == 0:
            return None

        total_boxes = np.vstack(total_boxes)

        if total_boxes.size == 0:
            return None

        # merge the detection from first stage
        pick = nms(total_boxes[:, 0:5], 0.7, 'Union')
        total_boxes = total_boxes[pick]

        bbw = total_boxes[:, 2] - total_boxes[:, 0] + 1
        bbh = total_boxes[:, 3] - total_boxes[:, 1] + 1

        # refine the bboxes
        total_boxes = np.vstack([total_boxes[:, 0]+total_boxes[:, 5] * bbw,
                                 total_boxes[:, 1]+total_boxes[:, 6] * bbh,
                                 total_boxes[:, 2]+total_boxes[:, 7] * bbw,
                                 total_boxes[:, 3]+total_boxes[:, 8] * bbh,
                                 total_boxes[:, 4]
                                 ])

        total_boxes = total_boxes.T
        total_boxes = self.convert_to_square(total_boxes)
        total_boxes[:, 0:4] = np.round(total_boxes[:, 0:4])

        #############################################
        # second stage
        #############################################
        num_box = total_boxes.shape[0]

        # pad the bbox
        [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph] = self.pad(total_boxes, width, height)
        # (3, 24, 24) is the input shape for RNet
        input_buf = np.zeros((num_box, 3, 24, 24), dtype=np.float32)

        for i in range(num_box):
            tmp = np.zeros((tmph[i], tmpw[i], 3), dtype=np.uint8)
            tmp[dy[i]:edy[i]+1, dx[i]:edx[i]+1, :] = img[y[i]:ey[i]+1, x[i]:ex[i]+1, :]
            input_buf[i, :, :, :] = adjust_input(cv2.resize(tmp, (24, 24)))

        output = self.RNet.predict(input_buf)

        # filter the total_boxes with threshold
        passed = np.where(output[1][:, 1] > self.threshold[1])
        total_boxes = total_boxes[passed]

        if total_boxes.size == 0:
            return None

        total_boxes[:, 4] = output[1][passed, 1].reshape((-1,))
        reg = output[0][passed]

        # nms
        pick = nms(total_boxes, 0.7, 'Union')
        total_boxes = total_boxes[pick]
        total_boxes = self.calibrate_box(total_boxes, reg[pick])
        total_boxes = self.convert_to_square(total_boxes)
        total_boxes[:, 0:4] = np.round(total_boxes[:, 0:4])

        #############################################
        # third stage
        #############################################
        num_box = total_boxes.shape[0]

        # pad the bbox
        [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph] = self.pad(total_boxes, width, height)
        # (3, 48, 48) is the input shape for ONet
        input_buf = np.zeros((num_box, 3, 48, 48), dtype=np.float32)

        for i in range(num_box):
            tmp = np.zeros((tmph[i], tmpw[i], 3), dtype=np.float32)
            tmp[dy[i]:edy[i]+1, dx[i]:edx[i]+1, :] = img[y[i]:ey[i]+1, x[i]:ex[i]+1, :]
            input_buf[i, :, :, :] = adjust_input(cv2.resize(tmp, (48, 48)))

        output = self.ONet.predict(input_buf)

        # filter the total_boxes with threshold
        passed = np.where(output[2][:, 1] > self.threshold[2])
        total_boxes = total_boxes[passed]

        if total_boxes.size == 0:
            return None

        total_boxes[:, 4] = output[2][passed, 1].reshape((-1,))
        reg = output[1][passed]
        points = output[0][passed]

        # compute landmark points
        bbw = total_boxes[:, 2] - total_boxes[:, 0] + 1
        bbh = total_boxes[:, 3] - total_boxes[:, 1] + 1
        points[:, 0:5] = np.expand_dims(total_boxes[:, 0], 1) + np.expand_dims(bbw, 1) * points[:, 0:5]
        points[:, 5:10] = np.expand_dims(total_boxes[:, 1], 1) + np.expand_dims(bbh, 1) * points[:, 5:10]

        # nms
        total_boxes = self.calibrate_box(total_boxes, reg)
        pick = nms(total_boxes, 0.7, 'Min')
        total_boxes = total_boxes[pick]
        points = points[pick]

        if not self.accurate_landmark:
            return total_boxes, points

        #############################################
        # extended stage
        #############################################
        num_box = total_boxes.shape[0]
        patchw = np.maximum(total_boxes[:, 2]-total_boxes[:, 0]+1, total_boxes[:, 3]-total_boxes[:, 1]+1)
        patchw = np.round(patchw*0.25)

        # make it even
        patchw[np.where(np.mod(patchw, 2) == 1)] += 1

        input_buf = np.zeros((num_box, 15, 24, 24), dtype=np.float32)
        for i in range(5):
            x, y = points[:, i], points[:, i+5]
            x, y = np.round(x-0.5*patchw), np.round(y-0.5*patchw)
            [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph] = self.pad(np.vstack([x, y, x+patchw-1, y+patchw-1]).T,
                                                                    width,
                                                                    height)
            for j in range(num_box):
                tmpim = np.zeros((tmpw[j], tmpw[j], 3), dtype=np.float32)
                tmpim[dy[j]:edy[j]+1, dx[j]:edx[j]+1, :] = img[y[j]:ey[j]+1, x[j]:ex[j]+1, :]
                input_buf[j, i*3:i*3+3, :, :] = adjust_input(cv2.resize(tmpim, (24, 24)))

        output = self.LNet.predict(input_buf)

        pointx = np.zeros((num_box, 5))
        pointy = np.zeros((num_box, 5))

        for k in range(5):
            # do not make a large movement
            tmp_index = np.where(np.abs(output[k]-0.5) > 0.35)
            output[k][tmp_index[0]] = 0.5

            pointx[:, k] = np.round(points[:, k] - 0.5*patchw) + output[k][:, 0]*patchw
            pointy[:, k] = np.round(points[:, k+5] - 0.5*patchw) + output[k][:, 1]*patchw

        points = np.hstack([pointx, pointy])
        points = points.astype(np.int32)

        return total_boxes, points
```
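`convert_to_square()` and `calibrate_box()` are plain NumPy and can be tried in isolation. The sketch below copies their arithmetic into free functions (hypothetical names; unlike the method above, this `calibrate_box` returns a new array instead of modifying its input) and applies them to one 20 × 40 box: the square keeps the box centre and takes the longer side, and the regression offsets are fractions of the box width and height.

```python
import numpy as np


def convert_to_square(bbox):
    """Grow each (x1, y1, x2, y2, score) box to a square around its centre."""
    square = bbox.copy()
    h = bbox[:, 3] - bbox[:, 1] + 1
    w = bbox[:, 2] - bbox[:, 0] + 1
    side = np.maximum(h, w)
    square[:, 0] = bbox[:, 0] + w * 0.5 - side * 0.5
    square[:, 1] = bbox[:, 1] + h * 0.5 - side * 0.5
    square[:, 2] = square[:, 0] + side - 1
    square[:, 3] = square[:, 1] + side - 1
    return square


def calibrate_box(bbox, reg):
    """Shift corners by reg = (dx1, dy1, dx2, dy2), given as fractions of box width/height."""
    out = bbox.copy()
    w = (bbox[:, 2] - bbox[:, 0] + 1)[:, None]
    h = (bbox[:, 3] - bbox[:, 1] + 1)[:, None]
    out[:, 0:4] = bbox[:, 0:4] + np.hstack([w, h, w, h]) * reg
    return out


box = np.array([[10.0, 20.0, 29.0, 59.0, 0.9]])  # 20 wide, 40 tall
sq = convert_to_square(box)
print(sq[0, :4].tolist())       # [0.0, 20.0, 39.0, 59.0]
refined = calibrate_box(sq, np.array([[0.1, 0.0, -0.1, 0.0]]))
print(refined[0, :4].tolist())  # [4.0, 20.0, 35.0, 59.0]
```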

```python
# coding: utf-8
# helper.py
import math
import cv2
import numpy as np


def nms(boxes, overlap_threshold, mode='Union'):
    """
        non max suppression

    Parameters:
    ----------
        boxes: numpy array n x 5
            input bbox array
        overlap_threshold: float number
            threshold of overlap
        mode: string
            how to compute overlap ratio, 'Union' or 'Min'
    Returns:
    -------
        index array of the selected bbox
    """
    # if there are no boxes, return an empty list
    if len(boxes) == 0:
        return []

    # if the bounding boxes are integers, convert them to floats
    if boxes.dtype.kind == "i":
        boxes = boxes.astype("float")

    # initialize the list of picked indexes
    pick = []

    # grab the coordinates of the bounding boxes
    x1, y1, x2, y2, score = [boxes[:, i] for i in range(5)]

    area = (x2 - x1 + 1) * (y2 - y1 + 1)
    idxs = np.argsort(score)

    # keep looping while some indexes still remain in the indexes list
    while len(idxs) > 0:
        # grab the last index (highest score) and add it to the list of picked indexes
        last = len(idxs) - 1
        i = idxs[last]
        pick.append(i)

        xx1 = np.maximum(x1[i], x1[idxs[:last]])
        yy1 = np.maximum(y1[i], y1[idxs[:last]])
        xx2 = np.minimum(x2[i], x2[idxs[:last]])
        yy2 = np.minimum(y2[i], y2[idxs[:last]])

        # compute the width and height of the intersection
        w = np.maximum(0, xx2 - xx1 + 1)
        h = np.maximum(0, yy2 - yy1 + 1)

        inter = w * h
        if mode == 'Min':
            overlap = inter / np.minimum(area[i], area[idxs[:last]])
        else:
            overlap = inter / (area[i] + area[idxs[:last]] - inter)

        # delete the picked index and all indexes whose overlap with it exceeds the threshold
        idxs = np.delete(idxs, np.concatenate(([last],
                                               np.where(overlap > overlap_threshold)[0])))

    return pick


def adjust_input(in_data):
    """
        adjust the input from (h, w, c) to (1, c, h, w) for network input

    Parameters:
    ----------
        in_data: numpy array of shape (h, w, c)
            input data
    Returns:
    -------
        out_data: numpy array of shape (1, c, h, w)
            reshaped array, scaled from [0, 255] to [-1, 1]
    """
    if in_data.dtype != np.float32:
        out_data = in_data.astype(np.float32)
    else:
        out_data = in_data

    out_data = out_data.transpose((2, 0, 1))
    out_data = np.expand_dims(out_data, 0)
    out_data = (out_data - 127.5)*0.0078125
    return out_data


def generate_bbox(map, reg, scale, threshold):
    """
        generate bbox from feature map
    Parameters:
    ----------
        map: numpy array, n x m
            detect score for each position
        reg: numpy array, 1 x 4 x n x m
            bbox regression offsets
        scale: float number
            scale of this detection
        threshold: float number
            detect threshold
    Returns:
    -------
        bbox array
    """
    stride = 2
    cellsize = 12

    t_index = np.where(map > threshold)

    # find nothing
    if t_index[0].size == 0:
        return np.array([])

    dx1, dy1, dx2, dy2 = [reg[0, i, t_index[0], t_index[1]] for i in range(4)]

    reg = np.array([dx1, dy1, dx2, dy2])
    score = map[t_index[0], t_index[1]]
    boundingbox = np.vstack([np.round((stride*t_index[1]+1)/scale),
                             np.round((stride*t_index[0]+1)/scale),
                             np.round((stride*t_index[1]+1+cellsize)/scale),
                             np.round((stride*t_index[0]+1+cellsize)/scale),
                             score,
                             reg])

    return boundingbox.T


def detect_first_stage(img, net, scale, threshold):
    """
        run PNet for first stage

    Parameters:
    ----------
        img: numpy array, bgr order
            input image
        net: PNet
            worker
        scale: float number
            how much should the input image scale
        threshold: float number
            detect threshold
    Returns:
    -------
        total_boxes : bboxes
    """
    height, width, _ = img.shape
    hs = int(math.ceil(height * scale))
    ws = int(math.ceil(width * scale))

    im_data = cv2.resize(img, (ws, hs))

    # adjust for the network input
    input_buf = adjust_input(im_data)
    output = net.predict(input_buf)
    boxes = generate_bbox(output[1][0, 1, :, :], output[0], scale, threshold)

    if boxes.size == 0:
        return None

    # nms
    pick = nms(boxes[:, 0:5], 0.5, mode='Union')
    boxes = boxes[pick]
    return boxes


def detect_first_stage_warpper(args):
    return detect_first_stage(*args)
```
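`nms()` supports two overlap measures: 'Union' divides the intersection by the union of the two boxes (IoU), while 'Min' divides it by the area of the smaller box, so a small box lying inside a larger, higher-scoring one is suppressed even when its IoU is low. That is presumably why the ONet stage of `detect_face()` uses 'Min'. The compact sketch below implements the same greedy algorithm; it sorts scores in descending order instead of taking the last element of an ascending sort, which is equivalent apart from tie-breaking.

```python
import numpy as np


def nms(boxes, overlap_threshold, mode="Union"):
    """Greedy NMS on an (n, 5) array [x1, y1, x2, y2, score]; returns kept row indices."""
    if len(boxes) == 0:
        return []
    x1, y1, x2, y2, score = (boxes[:, i].astype(float) for i in range(5))
    area = (x2 - x1 + 1) * (y2 - y1 + 1)
    order = np.argsort(score)[::-1]  # highest score first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # intersection of box i with every remaining box
        w = np.maximum(0, np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]) + 1)
        h = np.maximum(0, np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]) + 1)
        inter = w * h
        if mode == "Min":
            overlap = inter / np.minimum(area[i], area[rest])
        else:
            overlap = inter / (area[i] + area[rest] - inter)
        order = rest[overlap <= overlap_threshold]  # drop boxes that overlap too much
    return keep


boxes = np.array([[0, 0, 9, 9, 0.9],     # 10x10
                  [1, 1, 10, 10, 0.8],   # shifted copy, IoU with box 0 is about 0.68
                  [20, 20, 29, 29, 0.7], # far away
                  [2, 2, 5, 5, 0.6]])    # 4x4 box inside box 0
print(nms(boxes, 0.5, "Union"))  # [0, 2, 3]
print(nms(boxes, 0.7, "Min"))    # [0, 2]
```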

Caffe implementation

_init_paths.py

```python
import os.path as osp
import sys

def add_path(path):
    if path not in sys.path:
        sys.path.insert(0, path)

caffe_path = '/home/zou/caffe'

# Add caffe to PYTHONPATH
caffe_path = osp.join(caffe_path, 'python')
add_path(caffe_path)
```
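The hard-coded Caffe path above only works on the author's machine. One option is to read the Caffe root from an environment variable and fall back to that path; the variable name `CAFFE_ROOT` is an assumption in this sketch, not something Caffe itself requires.

```python
import os
import os.path as osp
import sys


def add_path(path):
    """Prepend path to sys.path, but only once."""
    if path not in sys.path:
        sys.path.insert(0, path)


# CAFFE_ROOT is an assumed variable name; the fallback is the path used above
caffe_root = os.environ.get("CAFFE_ROOT", "/home/zou/caffe")
add_path(osp.join(caffe_root, "python"))
```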

demo.py

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import _init_paths
import caffe
import cv2
import numpy as np


def bbreg(boundingbox, reg):
    reg = reg.T

    # calibrate bounding boxes
    if reg.shape[1] == 1:
        pass # reshape of reg
    w = boundingbox[:,2] - boundingbox[:,0] + 1
    h = boundingbox[:,3] - boundingbox[:,1] + 1

    bb0 = boundingbox[:,0] + reg[:,0]*w
    bb1 = boundingbox[:,1] + reg[:,1]*h
    bb2 = boundingbox[:,2] + reg[:,2]*w
    bb3 = boundingbox[:,3] + reg[:,3]*h

    boundingbox[:,0:4] = np.array([bb0, bb1, bb2, bb3]).T
    #print "bb", boundingbox
    return boundingbox


def pad(boxesA, w, h):
    boxes = boxesA.copy() # copy: the slices below would otherwise modify the caller's array
    tmph = boxes[:,3] - boxes[:,1] + 1
    tmpw = boxes[:,2] - boxes[:,0] + 1
    numbox = boxes.shape[0]

    dx = np.ones(numbox)
    dy = np.ones(numbox)
    edx = tmpw.copy() # copy, so that tmpw itself is not overwritten below
    edy = tmph.copy()

    x = boxes[:,0:1][:,0]
    y = boxes[:,1:2][:,0]
    ex = boxes[:,2:3][:,0]
    ey = boxes[:,3:4][:,0]

    tmp = np.where(ex > w)[0]
    if tmp.shape[0] != 0:
        edx[tmp] = -ex[tmp] + w-1 + tmpw[tmp]
        ex[tmp] = w-1

    tmp = np.where(ey > h)[0]
    if tmp.shape[0] != 0:
        edy[tmp] = -ey[tmp] + h-1 + tmph[tmp]
        ey[tmp] = h-1

    tmp = np.where(x < 1)[0]
    if tmp.shape[0] != 0:
        dx[tmp] = 2 - x[tmp]
        x[tmp] = np.ones_like(x[tmp])

    tmp = np.where(y < 1)[0]
    if tmp.shape[0] != 0:
        dy[tmp] = 2 - y[tmp]
        y[tmp] = np.ones_like(y[tmp])

    # for python index from 0, while matlab from 1
    dy = np.maximum(0, dy-1)
    dx = np.maximum(0, dx-1)
    y = np.maximum(0, y-1)
    x = np.maximum(0, x-1)
    edy = np.maximum(0, edy-1)
    edx = np.maximum(0, edx-1)
    ey = np.maximum(0, ey-1)
    ex = np.maximum(0, ex-1)

    #print 'boxes', boxes
    # integer outputs: they are used as array sizes and slice indices
    return [item.astype(np.int32) for item in [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph]]


def rerec(bboxA):
    # convert bboxA to square
    w = bboxA[:,2] - bboxA[:,0]
    h = bboxA[:,3] - bboxA[:,1]
    l = np.maximum(w,h).T

    bboxA[:,0] = bboxA[:,0] + w*0.5 - l*0.5
    bboxA[:,1] = bboxA[:,1] + h*0.5 - l*0.5
    bboxA[:,2:4] = bboxA[:,0:2] + np.repeat([l], 2, axis = 0).T
    return bboxA


def nms(boxes, threshold, type):
    """nms
    :boxes: [:,0:5]
    :threshold: 0.5 like
    :type: 'Min' or others
    :returns: list of indices of the kept boxes
    """
    if boxes.shape[0] == 0:
        return np.array([])
    x1 = boxes[:,0]
    y1 = boxes[:,1]
    x2 = boxes[:,2]
    y2 = boxes[:,3]
    s = boxes[:,4]
    area = np.multiply(x2-x1+1, y2-y1+1)
    I = np.array(s.argsort()) # read s using I

    pick = []
    while len(I) > 0:
        xx1 = np.maximum(x1[I[-1]], x1[I[0:-1]])
        yy1 = np.maximum(y1[I[-1]], y1[I[0:-1]])
        xx2 = np.minimum(x2[I[-1]], x2[I[0:-1]])
        yy2 = np.minimum(y2[I[-1]], y2[I[0:-1]])
        w = np.maximum(0.0, xx2 - xx1 + 1)
        h = np.maximum(0.0, yy2 - yy1 + 1)
        inter = w * h
        if type == 'Min':
            o = inter / np.minimum(area[I[-1]], area[I[0:-1]])
        else:
            o = inter / (area[I[-1]] + area[I[0:-1]] - inter)
        pick.append(I[-1])
        I = I[np.where( o <= threshold)[0]]
    return pick


def generateBoundingBox(map, reg, scale, t):
    stride = 2
    cellsize = 12
    map = map.T
    dx1 = reg[0,:,:].T
    dy1 = reg[1,:,:].T
    dx2 = reg[2,:,:].T
    dy2 = reg[3,:,:].T
    (x, y) = np.where(map >= t)

    yy = y
    xx = x

    '''
    if y.shape[0] == 1: # only one point exceed threshold
        y = y.T
        x = x.T
        score = map[x,y].T
        dx1 = dx1.T
        dy1 = dy1.T
        dx2 = dx2.T
        dy2 = dy2.T
        # a little strange, when there is only one bb created by PNet

        #print "1: x,y", x,y
        a = (x*map.shape[1]) + (y+1)
        x = a/map.shape[0]
        y = a%map.shape[0] - 1
        #print "2: x,y", x,y
    else:
        score = map[x,y]
    '''
    score = map[x,y]
    reg = np.array([dx1[x,y], dy1[x,y], dx2[x,y], dy2[x,y]])

    if reg.shape[0] == 0:
        pass
    boundingbox = np.array([yy, xx]).T

    bb1 = np.fix((stride * (boundingbox) + 1) / scale).T # matlab index from 1, so with "boundingbox-1"
    bb2 = np.fix((stride * (boundingbox) + cellsize - 1 + 1) / scale).T # while python don't have to
    score = np.array([score])

    boundingbox_out = np.concatenate((bb1, bb2, score, reg), axis=0)

    return boundingbox_out.T


def drawBoxes(im, boxes):
    x1 = boxes[:,0]
    y1 = boxes[:,1]
    x2 = boxes[:,2]
    y2 = boxes[:,3]
    for i in range(x1.shape[0]):
        cv2.rectangle(im, (int(x1[i]), int(y1[i])), (int(x2[i]), int(y2[i])), (0,255,0), 1)
    return im


def drawPoints(im, points):
    for i in range(points.shape[0]):
        left_eye = (int(points[i][0]),int(points[i][5]))
        cv2.circle(im, left_eye,2, (0,0,255), 2)
        right_eye = (int(points[i][1]),int(points[i][6]))
        cv2.circle(im, right_eye,2, (0,0,255), 2)
        nose = (int(points[i][2]),int(points[i][7]))
        cv2.circle(im, nose,2, (0,0,255), 2)
        left_mouth = (int(points[i][3]),int(points[i][8]))
        cv2.circle(im, left_mouth,2, (0,0,255), 2)
        right_mouth = (int(points[i][4]),int(points[i][9]))
        cv2.circle(im, right_mouth,2, (0,0,255), 2)
    return im


def drawPatch(im, points):
    for i in range(points.shape[0]):
        left_eye = (int(points[i][0]),int(points[i][5]))
        right_eye = (int(points[i][1]),int(points[i][6]))
        nose = (int(points[i][2]),int(points[i][7]))
        left_mouth = (int(points[i][3]),int(points[i][8]))
        right_mouth = (int(points[i][4]),int(points[i][9]))

        eye_length = np.sqrt((left_eye[0]-right_eye[0])*(left_eye[0]-right_eye[0])+(left_eye[1]-right_eye[1])*(left_eye[1]-right_eye[1]))
        mouth_length = np.sqrt((left_mouth[0]-right_mouth[0])*(left_mouth[0]-right_mouth[0])+(left_mouth[1]-right_mouth[1])*(left_mouth[1]-right_mouth[1]))

        t11_x = left_eye[0]
        t11_y = left_eye[1] - 0.8*eye_length
        t12_x = left_eye[0] + eye_length
        t12_y = left_eye[1] - 0.4*eye_length
        cv2.rectangle(im, (int(t11_x), int(t11_y)), (int(t12_x), int(t12_y)), (0,255,0), 1)

        t21_x = (left_eye[0] + right_eye[0])/2 - 0.15*eye_length
        t21_y = (left_eye[1] + right_eye[1])/2 - 0.3*eye_length
        t22_x = (left_eye[0] + right_eye[0])/2 + 0.15*eye_length
        t22_y = (left_eye[1] + right_eye[1])/2
        cv2.rectangle(im, (int(t21_x), int(t21_y)), (int(t22_x), int(t22_y)), (0,255,0), 1)

        t31_x = (left_eye[0] +nose[0])/2-0.1*eye_length
        t31_y = ((left_eye[1] + right_eye[1])/2 + nose[1])/2 - 0.1*eye_length
        t32_x = (left_eye[0] +nose[0])/2+0.1*eye_length
        t32_y = ((left_eye[1] + right_eye[1])/2 + nose[1])/2 + 0.1*eye_length
        cv2.rectangle(im, (int(t31_x), int(t31_y)), (int(t32_x), int(t32_y)), (0,255,0), 1)

        t41_x = (right_eye[0] +nose[0])/2-0.1*eye_length
        t41_y = ((left_eye[1] + right_eye[1])/2 + nose[1])/2 - 0.1*eye_length
        t42_x = (right_eye[0] +nose[0])/2+0.1*eye_length
        t42_y = ((left_eye[1] + right_eye[1])/2 + nose[1])/2 + 0.1*eye_length
        cv2.rectangle(im, (int(t41_x), int(t41_y)), (int(t42_x), int(t42_y)), (0,255,0), 1)

        t51_x = nose[0]-0.1*eye_length
        t51_y = nose[1]-0.1*eye_length
        t52_x = nose[0]+0.1*eye_length
        t52_y = nose[1]+0.1*eye_length
        cv2.rectangle(im, (int(t51_x), int(t51_y)), (int(t52_x), int(t52_y)), (0,255,0), 1)

        t61_x = nose[0]-0.1*eye_length
        t61_y = nose[1]+0.2*eye_length
        t62_x = nose[0]+0.1*eye_length
        t62_y = nose[1]+0.4*eye_length
        cv2.rectangle(im, (int(t61_x), int(t61_y)), (int(t62_x), int(t62_y)), (0,255,0), 1)

        t71_x = left_mouth[0] - 0.7*mouth_length
        t71_y = left_mouth[1] - mouth_length
        t72_x = left_mouth[0]
        t72_y = left_mouth[1]
        cv2.rectangle(im, (int(t71_x), int(t71_y)), (int(t72_x), int(t72_y)), (0,255,0), 1)

        t81_x = right_mouth[0]
        t81_y = right_mouth[1] - mouth_length
        t82_x = right_mouth[0] + 0.7*mouth_length
        t82_y = left_mouth[1]
        cv2.rectangle(im, (int(t81_x), int(t81_y)), (int(t82_x), int(t82_y)), (0,255,0), 1)

    return im


from time import time
_tstart_stack = []

def tic():
    _tstart_stack.append(time())

def toc(fmt="Elapsed: %s s"):
    print(fmt % (time()-_tstart_stack.pop()))


def detect_face(img, minsize, PNet, RNet, ONet, threshold, fastresize, factor):

    img2 = img.copy()

    factor_count = 0
    total_boxes = np.zeros((0,9), np.float64)
    points = []
    h = img.shape[0]
    w = img.shape[1]
    minl = min(h, w)
    img = img.astype(float)
    m = 12.0/minsize
    minl = minl*m

    # create scale pyramid
    scales = []
    while minl >= 12:
        scales.append(m * pow(factor, factor_count))
        minl *= factor
        factor_count += 1

    # first stage
    for scale in scales:
        hs = int(np.ceil(h*scale))
        ws = int(np.ceil(w*scale))

        if fastresize:
            im_data = (img-127.5)*0.0078125 # [0,255] -> [-1,1]
            im_data = cv2.resize(im_data, (ws,hs)) # default is bilinear
        else:
            im_data = cv2.resize(img, (ws,hs)) # default is bilinear
            im_data = (im_data-127.5)*0.0078125 # [0,255] -> [-1,1]

        # (hs, ws, 3) -> (3, ws, hs): the image is fed transposed, as in the original MATLAB code
        im_data = np.swapaxes(im_data, 0, 2)
        im_data = np.array([im_data], dtype = np.float64)
        PNet.blobs['data'].reshape(1, 3, ws, hs)
        PNet.blobs['data'].data[...] = im_data
        out = PNet.forward()

        boxes = generateBoundingBox(out['prob1'][0,1,:,:], out['conv4-2'][0], scale, threshold[0])
        if boxes.shape[0] != 0:
            pick = nms(boxes, 0.5, 'Union')
            if len(pick) > 0 :
                boxes = boxes[pick, :]

        if boxes.shape[0] != 0:
            total_boxes = np.concatenate((total_boxes, boxes), axis=0)

    #####
    # 1 #
    #####
    numbox = total_boxes.shape[0]
    if numbox > 0:
        # nms
        pick = nms(total_boxes, 0.7, 'Union')
        total_boxes = total_boxes[pick, :]

        # revise and convert to square
        regh = total_boxes[:,3] - total_boxes[:,1]
        regw = total_boxes[:,2] - total_boxes[:,0]
        t1 = total_boxes[:,0] + total_boxes[:,5]*regw
        t2 = total_boxes[:,1] + total_boxes[:,6]*regh
        t3 = total_boxes[:,2] + total_boxes[:,7]*regw
        t4 = total_boxes[:,3] + total_boxes[:,8]*regh
        t5 = total_boxes[:,4]
        total_boxes = np.array([t1,t2,t3,t4,t5]).T

        total_boxes = rerec(total_boxes) # convert box to square
        total_boxes[:,0:4] = np.fix(total_boxes[:,0:4])
        [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph] = pad(total_boxes, w, h)

    #print total_boxes.shape
    #print total_boxes

    numbox = total_boxes.shape[0]
    if numbox > 0:
        # second stage
        # construct input for RNet
        tempimg = np.zeros((numbox, 24, 24, 3)) # (24, 24, 3, numbox)
        for k in range(numbox):
            tmp = np.zeros((tmph[k], tmpw[k],3))
            tmp[dy[k]:edy[k]+1, dx[k]:edx[k]+1] = img[y[k]:ey[k]+1, x[k]:ex[k]+1]
            tempimg[k,:,:,:] = cv2.resize(tmp, (24, 24))
        tempimg = (tempimg-127.5)*0.0078125 # done in imResample function wrapped by python

        # RNet
        tempimg = np.swapaxes(tempimg, 1, 3)
        RNet.blobs['data'].reshape(numbox, 3, 24, 24)
        RNet.blobs['data'].data[...] = tempimg
        out = RNet.forward()

        score = out['prob1'][:,1]
        pass_t = np.where(score>threshold[1])[0]
        score = np.array([score[pass_t]]).T
        total_boxes = np.concatenate( (total_boxes[pass_t, 0:4], score), axis = 1)
        mv = out['conv5-2'][pass_t, :].T

        if total_boxes.shape[0] > 0:
            pick = nms(total_boxes, 0.7, 'Union')
            if len(pick) > 0 :
                total_boxes = total_boxes[pick, :]
                total_boxes = bbreg(total_boxes, mv[:, pick])
                total_boxes = rerec(total_boxes)

        #####
        # 2 #
        #####
        numbox = total_boxes.shape[0]
        if numbox > 0:
            # third stage
            total_boxes = np.fix(total_boxes)
            [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph] = pad(total_boxes, w, h)

            tempimg = np.zeros((numbox, 48, 48, 3))
            for k in range(numbox):
                tmp = np.zeros((tmph[k], tmpw[k],3))
                tmp[dy[k]:edy[k]+1, dx[k]:edx[k]+1] = img[y[k]:ey[k]+1, x[k]:ex[k]+1]
                tempimg[k,:,:,:] = cv2.resize(tmp, (48, 48))
            tempimg = (tempimg-127.5)*0.0078125 # [0,255] -> [-1,1]

            # ONet
            tempimg = np.swapaxes(tempimg, 1, 3)
            ONet.blobs['data'].reshape(numbox, 3, 48, 48)
            ONet.blobs['data'].data[...] = tempimg
            out = ONet.forward()

            score = out['prob1'][:,1]
            points = out['conv6-3']
            pass_t = np.where(score>threshold[2])[0]
            points = points[pass_t, :]
            score = np.array([score[pass_t]]).T
            total_boxes = np.concatenate( (total_boxes[pass_t, 0:4], score), axis=1)

            mv = out['conv6-2'][pass_t, :].T
            # box width and height (x1,y1,x2,y2 are columns 0..3)
            bbw = total_boxes[:,2] - total_boxes[:,0] + 1
            bbh = total_boxes[:,3] - total_boxes[:,1] + 1

            points[:, 0:5] = np.tile(bbw, (5,1)).T * points[:, 0:5] + np.tile(total_boxes[:,0], (5,1)).T - 1
            points[:, 5:10] = np.tile(bbh, (5,1)).T * points[:, 5:10] + np.tile(total_boxes[:,1], (5,1)).T - 1

            if total_boxes.shape[0] > 0:
                total_boxes = bbreg(total_boxes, mv[:,:])
                pick = nms(total_boxes, 0.7, 'Min')
                if len(pick) > 0 :
                    total_boxes = total_boxes[pick, :]
                    points = points[pick, :]

    #####
    # 3 #
    #####
    return total_boxes, points


def initFaceDetector():
    minsize = 20
    caffe_model_path = "/home/duino/iactive/mtcnn/model"
    threshold = [0.6, 0.7, 0.7]
    factor = 0.709
    caffe.set_mode_cpu()
    PNet = caffe.Net(caffe_model_path+"/det1.prototxt", caffe_model_path+"/det1.caffemodel", caffe.TEST)
    RNet = caffe.Net(caffe_model_path+"/det2.prototxt", caffe_model_path+"/det2.caffemodel", caffe.TEST)
    ONet = caffe.Net(caffe_model_path+"/det3.prototxt", caffe_model_path+"/det3.caffemodel", caffe.TEST)
    return (minsize, PNet, RNet, ONet, threshold, factor)


def haveFace(img, facedetector):
    minsize = facedetector[0]
    PNet = facedetector[1]
    RNet = facedetector[2]
    ONet = facedetector[3]
    threshold = facedetector[4]
    factor = facedetector[5]

    if max(img.shape[0], img.shape[1]) < minsize:
        return False, []

    # swap B and R: OpenCV loads BGR, the models expect RGB
    img_matlab = img.copy()
    tmp = img_matlab[:,:,2].copy()
    img_matlab[:,:,2] = img_matlab[:,:,0]
    img_matlab[:,:,0] = tmp

    #tic()
    boundingboxes, points = detect_face(img_matlab, minsize, PNet, RNet, ONet, threshold, False, factor)
    #toc()
    containFace = (True, False)[boundingboxes.shape[0]==0]
    return containFace, boundingboxes


def main():
    minsize = 50

    caffe_model_path = "/home/zou/mtcnn/model"

    threshold = [0.6, 0.7, 0.7]
    factor = 0.709

    caffe.set_mode_gpu()
    PNet = caffe.Net(caffe_model_path+"/det1.prototxt", caffe_model_path+"/det1.caffemodel", caffe.TEST)
    RNet = caffe.Net(caffe_model_path+"/det2.prototxt", caffe_model_path+"/det2.caffemodel", caffe.TEST)
    ONet = caffe.Net(caffe_model_path+"/det3.prototxt", caffe_model_path+"/det3.caffemodel", caffe.TEST)

    camera = cv2.VideoCapture(0)
    while True:
        _, img = camera.read()

        # resize so that the longer side is 500 pixels
        h, w = img.shape[:2]
        if h >= w:
            w = int(w/(h/500.0))
            h = 500
        else:
            h = int(h/(w/500.0))
            w = 500
        img = cv2.resize(img, (w,h))

        # swap B and R: OpenCV loads BGR, the models expect RGB
        img_matlab = img.copy()
        tmp = img_matlab[:,:,2].copy()
        img_matlab[:,:,2] = img_matlab[:,:,0]
        img_matlab[:,:,0] = tmp

        # check rgb position
        tic()
        boundingboxes, points = detect_face(img_matlab, minsize, PNet, RNet, ONet, threshold, False, factor)
        toc()

        # "& 1" enables and "& 0" disables each drawing mode
        if (len(boundingboxes)>0)&1:
            img = drawBoxes(img, boundingboxes)
            img = drawPoints(img, points)

        if (len(boundingboxes)>0)&0:
            img = drawBoxes(img, boundingboxes)
            img = drawPatch(img, points)

        cv2.imshow('img', img)
        ch = cv2.waitKey(1)
        if ch == 27:
            break

        #if boundingboxes.shape[0] > 0:
        #    error.append[imgpath]
    #print error


if __name__ == "__main__":
    main()
```
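The `pad()` helpers in both implementations solve the same problem: a square box may extend past the image border, so the crop is copied from the intersection of the box and the image into a zero-filled buffer of the full box size. A minimal sketch of that idea with 0-based, inclusive corners follows; `crop_with_padding` is a hypothetical name, and it does not reproduce the MATLAB-style index bookkeeping of `pad()`.

```python
import numpy as np


def crop_with_padding(img, x1, y1, x2, y2):
    """Return the inclusive box [x1..x2] x [y1..y2] of img; parts outside the image are zeros."""
    h, w = img.shape[:2]
    out = np.zeros((y2 - y1 + 1, x2 - x1 + 1) + img.shape[2:], dtype=img.dtype)
    # intersection of the box with the image, in image coordinates
    sx1, sy1 = max(x1, 0), max(y1, 0)
    sx2, sy2 = min(x2, w - 1), min(y2, h - 1)
    if sx1 > sx2 or sy1 > sy2:
        return out  # box lies completely outside the image
    # the same region expressed in crop coordinates
    dx, dy = sx1 - x1, sy1 - y1
    out[dy:dy + sy2 - sy1 + 1, dx:dx + sx2 - sx1 + 1] = img[sy1:sy2 + 1, sx1:sx2 + 1]
    return out


img = np.ones((4, 4, 3), dtype=np.uint8)
patch = crop_with_padding(img, -1, -1, 2, 2)  # box hangs off the top-left corner
print(patch.shape, int(patch[..., 0].sum()))  # (4, 4, 3) 9
```

The padded crop is then resized to 24 × 24 (RNet) or 48 × 48 (ONet), so the box keeps its aspect ratio and position even at the image border.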

C++ implementation

stdafx.h

// stdafx.h : 标准系统包含文件的包含文件,

// 或是经常使用但不常更改的

// 特定于项目的包含文件

//

#pragma once

#include "targetver.h"

#include <stdio.h>

#include <tchar.h>

#include <caffe/caffe.hpp>

#ifdef USE_OPENCV

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/videoio.hpp>

#endif // USE_OPENCV

#include <algorithm>

#include <iosfwd>

#include <memory>

#include <string>

#include <utility>

#include <vector>

#ifdef WITH_PYTHON_LAYER

#include <boost/python.hpp>

#endif

#include <string>

#include <vector>

#include "caffe/layer.hpp"

#include "caffe/layer_factory.hpp"

#include "caffe/layers/input_layer.hpp"

#include "caffe/layers/inner_product_layer.hpp"

#include "caffe/layers/prelu_layer.hpp"

#include "caffe/layers/conv_layer.hpp"

#include "caffe/layers/pooling_layer.hpp"

#include "caffe/layers/softmax_layer.hpp"

#include "caffe/layers/memory_data_layer.hpp"

#include "caffe/layers/dropout_layer.hpp"

#include "caffe/proto/caffe.pb.h"

#ifdef USE_CUDNN

#include "caffe/layers/cudnn_conv_layer.hpp"

#include "caffe/layers/cudnn_pooling_layer.hpp"

#include "caffe/layers/cudnn_relu_layer.hpp"

#include "caffe/layers/cudnn_softmax_layer.hpp"

#endif

#ifdef WITH_PYTHON_LAYER

#include "caffe/layers/python_layer.hpp"

#endif

using namespace caffe; // NOLINT(build/namespaces)

extern INSTANTIATE_CLASS(InputLayer);

extern INSTANTIATE_CLASS(InnerProductLayer);

extern INSTANTIATE_CLASS(ConvolutionLayer);

extern INSTANTIATE_CLASS(PReLULayer);

extern INSTANTIATE_CLASS(PoolingLayer);

extern INSTANTIATE_CLASS(SoftmaxLayer);

extern INSTANTIATE_CLASS(MemoryDataLayer);

extern INSTANTIATE_CLASS(DropoutLayer);

// TODO: reference additional headers your program requires here

MTCNN.cpp

// MTCNN_VS2015.cpp : Defines the entry point for the console application.

//

#include "stdafx.h"

// c++

#include <string>

#include <vector>

// boost

#include "boost/make_shared.hpp"

//#define CPU_ONLY

using namespace caffe;

#define FROM_VIDEO 1

string resultdir = "result";

typedef struct FaceRect {

float x1;

float y1;

float x2;

float y2;

float score; /**< Larger score should mean higher confidence. */

} FaceRect;

typedef struct FacePts {

float x[5], y[5];

} FacePts;

typedef struct FaceInfo {

FaceRect bbox;

cv::Vec4f regression;

FacePts facePts;

double roll;

double pitch;

double yaw;

} FaceInfo;

template<typename Dtype>

Dtype max(Dtype x, Dtype y)

{

return x>=y ? x : y;

}

template<typename Dtype>

Dtype min(Dtype x, Dtype y)

{

return x < y ? x : y;

}

class MTCNN {

public:

MTCNN(const string& proto_model_dir);

void Detect(const cv::Mat& img, std::vector<FaceInfo> &faceInfo, int minSize, double* threshold, double factor);

private:

bool CvMatToDatumSignalChannel(const cv::Mat& cv_mat, Datum* datum);

//void Preprocess(const cv::Mat& img,

// std::vector<cv::Mat>* input_channels);

void WrapInputLayer(std::vector<cv::Mat>* input_channels, Blob<float>* input_layer,

const int height, const int width);

//void SetMean();

void GenerateBoundingBox(Blob<float>* confidence, Blob<float>* reg,

float scale, float thresh, int image_width, int image_height);

void ClassifyFace_MulImage(const std::vector<FaceInfo> &regressed_rects, cv::Mat &sample_single,

boost::shared_ptr<Net<float> >& net, double thresh, char netName);

std::vector<FaceInfo> NonMaximumSuppression(std::vector<FaceInfo>& bboxes, float thresh, char methodType);

void Bbox2Square(std::vector<FaceInfo>& bboxes);

void Padding(int img_w, int img_h);

std::vector<FaceInfo> BoxRegress(std::vector<FaceInfo> &faceInfo_, int stage);

//void RegressPoint(const std::vector<FaceInfo>& faceInfo);

private:

boost::shared_ptr<Net<float> > PNet_;

boost::shared_ptr<Net<float> > RNet_;

boost::shared_ptr<Net<float> > ONet_;

// x1, y1, x2, y2 and score

std::vector<FaceInfo> condidate_rects_;

std::vector<FaceInfo> total_boxes_;

std::vector<FaceInfo> regressed_rects_;

std::vector<FaceInfo> regressed_pading_;

std::vector<cv::Mat> crop_img_;

int curr_feature_map_w_;

int curr_feature_map_h_;

int num_channels_;

};

// compare score

bool CompareBBox(const FaceInfo & a, const FaceInfo & b) {

return a.bbox.score > b.bbox.score;

}

// methodType 'u': IoU (Intersection over Union)

// methodType 'm': IoM (Intersection over Minimum, i.e. intersection divided by the smaller box's area)

std::vector<FaceInfo> MTCNN::NonMaximumSuppression(std::vector<FaceInfo>& bboxes,

float thresh, char methodType) {

std::vector<FaceInfo> bboxes_nms;

std::sort(bboxes.begin(), bboxes.end(), CompareBBox);

int32_t select_idx = 0;

int32_t num_bbox = static_cast<int32_t>(bboxes.size());

std::vector<int32_t> mask_merged(num_bbox, 0);

bool all_merged = false;

while (!all_merged) {

while (select_idx < num_bbox && mask_merged[select_idx] == 1)

select_idx++;

if (select_idx == num_bbox) {

all_merged = true;

continue;

}

bboxes_nms.push_back(bboxes[select_idx]);

mask_merged[select_idx] = 1;

FaceRect select_bbox = bboxes[select_idx].bbox;

float area1 = static_cast<float>((select_bbox.x2 - select_bbox.x1 + 1) * (select_bbox.y2 - select_bbox.y1 + 1));

float x1 = static_cast<float>(select_bbox.x1);

float y1 = static_cast<float>(select_bbox.y1);

float x2 = static_cast<float>(select_bbox.x2);

float y2 = static_cast<float>(select_bbox.y2);

select_idx++;

for (int32_t i = select_idx; i < num_bbox; i++) {

if (mask_merged[i] == 1)

continue;

FaceRect& bbox_i = bboxes[i].bbox;

float x = std::max<float>(x1, static_cast<float>(bbox_i.x1));

float y = std::max<float>(y1, static_cast<float>(bbox_i.y1));

float w = std::min<float>(x2, static_cast<float>(bbox_i.x2)) - x + 1;

float h = std::min<float>(y2, static_cast<float>(bbox_i.y2)) - y + 1;

if (w <= 0 || h <= 0)

continue;

float area2 = static_cast<float>((bbox_i.x2 - bbox_i.x1 + 1) * (bbox_i.y2 - bbox_i.y1 + 1));

float area_intersect = w * h;

switch (methodType) {

case 'u':

if (static_cast<float>(area_intersect) / (area1 + area2 - area_intersect) > thresh)

mask_merged[i] = 1;

break;

case 'm':

if (static_cast<float>(area_intersect) / min(area1, area2) > thresh)

mask_merged[i] = 1;

break;

default:

break;

}

}

}

return bboxes_nms;

}

void MTCNN::Bbox2Square(std::vector<FaceInfo>& bboxes) {

for (int i = 0; i < bboxes.size(); i++) {

float h = bboxes[i].bbox.x2 - bboxes[i].bbox.x1;

float w = bboxes[i].bbox.y2 - bboxes[i].bbox.y1;

float side = h > w ? h : w;

bboxes[i].bbox.x1 += (h - side)*0.5;

bboxes[i].bbox.y1 += (w - side)*0.5;

bboxes[i].bbox.x2 = (int)(bboxes[i].bbox.x1 + side);

bboxes[i].bbox.y2 = (int)(bboxes[i].bbox.y1 + side);

bboxes[i].bbox.x1 = (int)(bboxes[i].bbox.x1);

bboxes[i].bbox.y1 = (int)(bboxes[i].bbox.y1);

}

}

std::vector<FaceInfo> MTCNN::BoxRegress(std::vector<FaceInfo>& faceInfo, int stage) {

std::vector<FaceInfo> bboxes;

for (int bboxId = 0; bboxId < faceInfo.size(); bboxId++) {

FaceRect faceRect;

FaceInfo tempFaceInfo;

float regw = faceInfo[bboxId].bbox.y2 - faceInfo[bboxId].bbox.y1;

regw += (stage == 1) ? 0 : 1;

float regh = faceInfo[bboxId].bbox.x2 - faceInfo[bboxId].bbox.x1;

regh += (stage == 1) ? 0 : 1;

faceRect.y1 = faceInfo[bboxId].bbox.y1 + regw * faceInfo[bboxId].regression[0];

faceRect.x1 = faceInfo[bboxId].bbox.x1 + regh * faceInfo[bboxId].regression[1];

faceRect.y2 = faceInfo[bboxId].bbox.y2 + regw * faceInfo[bboxId].regression[2];

faceRect.x2 = faceInfo[bboxId].bbox.x2 + regh * faceInfo[bboxId].regression[3];

faceRect.score = faceInfo[bboxId].bbox.score;

tempFaceInfo.bbox = faceRect;

tempFaceInfo.regression = faceInfo[bboxId].regression;

if (stage == 3)

tempFaceInfo.facePts = faceInfo[bboxId].facePts;

bboxes.push_back(tempFaceInfo);

}

return bboxes;

}

// compute the padding coordinates (pad the bounding boxes to square)

void MTCNN::Padding(int img_w, int img_h) {

for (int i = 0; i < regressed_rects_.size(); i++) {

FaceInfo tempFaceInfo;

tempFaceInfo = regressed_rects_[i];

tempFaceInfo.bbox.y2 = (regressed_rects_[i].bbox.y2 >= img_w) ? img_w : regressed_rects_[i].bbox.y2;

tempFaceInfo.bbox.x2 = (regressed_rects_[i].bbox.x2 >= img_h) ? img_h : regressed_rects_[i].bbox.x2;

tempFaceInfo.bbox.y1 = (regressed_rects_[i].bbox.y1 < 1) ? 1 : regressed_rects_[i].bbox.y1;

tempFaceInfo.bbox.x1 = (regressed_rects_[i].bbox.x1 < 1) ? 1 : regressed_rects_[i].bbox.x1;

regressed_pading_.push_back(tempFaceInfo);

}

}

void MTCNN::GenerateBoundingBox(Blob<float>* confidence, Blob<float>* reg,

float scale, float thresh, int image_width, int image_height) {

int stride = 2;

int cellSize = 12;

curr_feature_map_w_ = std::ceil((image_width - cellSize)*1.0 / stride) + 1;

curr_feature_map_h_ = std::ceil((image_height - cellSize)*1.0 / stride) + 1;

//std::cout << "Feature_map_size:"<< curr_feature_map_w_ <<" "<<curr_feature_map_h_<<std::endl;

int regOffset = curr_feature_map_w_*curr_feature_map_h_;

// the confidence blob has 2 channels (non-face, face); skip the first count values to read the face probabilities

int count = confidence->count() / 2;

const float* confidence_data = confidence->cpu_data();

confidence_data += count;

const float* reg_data = reg->cpu_data();

condidate_rects_.clear();

for (int i = 0; i < count; i++) {

if (*(confidence_data + i) >= thresh) {

int y = i / curr_feature_map_w_;

int x = i - curr_feature_map_w_ * y;

float xTop = (int)((x*stride + 1) / scale);

float yTop = (int)((y*stride + 1) / scale);

float xBot = (int)((x*stride + cellSize - 1 + 1) / scale);

float yBot = (int)((y*stride + cellSize - 1 + 1) / scale);

FaceRect faceRect;

faceRect.x1 = xTop;

faceRect.y1 = yTop;

faceRect.x2 = xBot;

faceRect.y2 = yBot;

faceRect.score = *(confidence_data + i);

FaceInfo faceInfo;

faceInfo.bbox = faceRect;

faceInfo.regression = cv::Vec4f(reg_data[i + 0 * regOffset], reg_data[i + 1 * regOffset], reg_data[i + 2 * regOffset], reg_data[i + 3 * regOffset]);

condidate_rects_.push_back(faceInfo);

}

}

}

MTCNN::MTCNN(const std::string &proto_model_dir) {

#ifdef CPU_ONLY

Caffe::set_mode(Caffe::CPU);

#else

Caffe::set_mode(Caffe::GPU);

#endif

/* Load the network. */

PNet_.reset(new Net<float>((proto_model_dir + "/det1.prototxt"), TEST));

PNet_->CopyTrainedLayersFrom(proto_model_dir + "/det1.caffemodel");

CHECK_EQ(PNet_->num_inputs(), 1) << "Network should have exactly one input.";

CHECK_EQ(PNet_->num_outputs(), 2) << "Network should have exactly two outputs, one"

" for bbox regression and one for confidence.";

//RNet_.reset(new Net<float>((proto_model_dir+"/det2.prototxt"), TEST));

RNet_.reset(new Net<float>((proto_model_dir + "/det2_input.prototxt"), TEST));

RNet_->CopyTrainedLayersFrom(proto_model_dir + "/det2.caffemodel");

// CHECK_EQ(RNet_->num_inputs(), 0) << "Network should have exactly one input.";

// CHECK_EQ(RNet_->num_outputs(),3) << "Network should have exactly two output, one"

// " is bbox and another is confidence.";

ONet_.reset(new Net<float>((proto_model_dir + "/det3_input.prototxt"), TEST));

ONet_->CopyTrainedLayersFrom(proto_model_dir + "/det3.caffemodel");

// CHECK_EQ(ONet_->num_inputs(), 1) << "Network should have exactly one input.";

// CHECK_EQ(ONet_->num_outputs(),3) << "Network should have exactly three output, one"

// " is bbox and another is confidence.";

Blob<float>* input_layer;

input_layer = PNet_->input_blobs()[0];

num_channels_ = input_layer->channels();

CHECK(num_channels_ == 3 || num_channels_ == 1) << "Input layer should have 1 or 3 channels.";

}

void MTCNN::WrapInputLayer(std::vector<cv::Mat>* input_channels,

Blob<float>* input_layer, const int height, const int width) {

float* input_data = input_layer->mutable_cpu_data();

for (int i = 0; i < input_layer->channels(); ++i) {

cv::Mat channel(height, width, CV_32FC1, input_data);

input_channels->push_back(channel);

input_data += width * height;

}

}

// run all candidate crops through RNet/ONet in a single batched forward pass

void MTCNN::ClassifyFace_MulImage(const std::vector<FaceInfo>& regressed_rects, cv::Mat &sample_single,

boost::shared_ptr<Net<float> >& net, double thresh, char netName) {

condidate_rects_.clear();

int numBox = regressed_rects.size();

std::vector<Datum> datum_vector;

boost::shared_ptr<MemoryDataLayer<float> > mem_data_layer;

mem_data_layer = boost::static_pointer_cast<MemoryDataLayer<float>>(net->layers()[0]);

int input_width = mem_data_layer->width();

int input_height = mem_data_layer->height();

// load crop_img data to datum

for (int i = 0; i < numBox; i++) {

int pad_top = std::abs(regressed_pading_[i].bbox.x1 - regressed_rects[i].bbox.x1);

int pad_left = std::abs(regressed_pading_[i].bbox.y1 - regressed_rects[i].bbox.y1);

int pad_right = std::abs(regressed_pading_[i].bbox.y2 - regressed_rects[i].bbox.y2);

int pad_bottom = std::abs(regressed_pading_[i].bbox.x2 - regressed_rects[i].bbox.x2);

cv::Mat crop_img = sample_single(cv::Range(regressed_pading_[i].bbox.y1 - 1, regressed_pading_[i].bbox.y2),

cv::Range(regressed_pading_[i].bbox.x1 - 1, regressed_pading_[i].bbox.x2));

cv::copyMakeBorder(crop_img, crop_img, pad_left, pad_right, pad_top, pad_bottom, cv::BORDER_CONSTANT, cv::Scalar(0));

cv::resize(crop_img, crop_img, cv::Size(input_width, input_height), 0, 0, cv::INTER_AREA);

crop_img = (crop_img - 127.5)*0.0078125;

Datum datum;

CvMatToDatumSignalChannel(crop_img, &datum);

datum_vector.push_back(datum);

}

regressed_pading_.clear();

/* extract the features and store */

mem_data_layer->set_batch_size(numBox);

mem_data_layer->AddDatumVector(datum_vector);

/* fire the network */

float no_use_loss = 0;

net->Forward(&no_use_loss);

// CHECK(reinterpret_cast<float*>(crop_img_set.at(0).data) == net->input_blobs()[0]->cpu_data())

// << "Input channels are not wrapping the input layer of the network.";

// return RNet/ONet result

std::string outPutLayerName = (netName == 'r' ? "conv5-2" : "conv6-2");

std::string pointsLayerName = "conv6-3";

const boost::shared_ptr<Blob<float> > reg = net->blob_by_name(outPutLayerName);

const boost::shared_ptr<Blob<float> > confidence = net->blob_by_name("prob1");

// ONet points_offset != NULL

const boost::shared_ptr<Blob<float> > points_offset = net->blob_by_name(pointsLayerName);

const float* confidence_data = confidence->cpu_data();

const float* reg_data = reg->cpu_data();

for (int i = 0; i<numBox; i++) {

if (*(confidence_data + i * 2 + 1) > thresh) {

FaceRect faceRect;

faceRect.x1 = regressed_rects[i].bbox.x1;

faceRect.y1 = regressed_rects[i].bbox.y1;

faceRect.x2 = regressed_rects[i].bbox.x2;

faceRect.y2 = regressed_rects[i].bbox.y2;

faceRect.score = *(confidence_data + i * 2 + 1);

FaceInfo faceInfo;

faceInfo.bbox = faceRect;

faceInfo.regression = cv::Vec4f(reg_data[4 * i + 0], reg_data[4 * i + 1], reg_data[4 * i + 2], reg_data[4 * i + 3]);

// x x x x x y y y y y

if (netName == 'o') {

FacePts face_pts;

const float* points_data = points_offset->cpu_data();

float w = faceRect.y2 - faceRect.y1 + 1;

float h = faceRect.x2 - faceRect.x1 + 1;

for (int j = 0; j < 5; j++) {

face_pts.y[j] = faceRect.y1 + *(points_data + j + 10 * i) * h - 1;

face_pts.x[j] = faceRect.x1 + *(points_data + j + 5 + 10 * i) * w - 1;

}

faceInfo.facePts = face_pts;

}

condidate_rects_.push_back(faceInfo);

}

}

}

bool MTCNN::CvMatToDatumSignalChannel(const cv::Mat& cv_mat, Datum* datum) {

if (cv_mat.empty())

return false;

int channels = cv_mat.channels();

datum->set_channels(cv_mat.channels());

datum->set_height(cv_mat.rows);

datum->set_width(cv_mat.cols);

datum->set_label(0);

datum->clear_data();

datum->clear_float_data();

datum->set_encoded(false);

int datum_height = datum->height();

int datum_width = datum->width();

if (channels == 3) {

for (int c = 0; c < channels; c++) {

for (int h = 0; h < datum_height; ++h) {

for (int w = 0; w < datum_width; ++w) {

const float* ptr = cv_mat.ptr<float>(h);

datum->add_float_data(ptr[w*channels + c]);

}

}

}

}

return true;

}

void MTCNN::Detect(const cv::Mat& image, std::vector<FaceInfo>& faceInfo, int minSize, double* threshold, double factor) {

// 2~3ms

// convert to float and to RGB channel order

cv::Mat sample_single, resized;

image.convertTo(sample_single, CV_32FC3);

cv::cvtColor(sample_single, sample_single, cv::COLOR_BGR2RGB);

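// transpose: the released MTCNN Caffe models expect the transposed (MATLAB-style, column-major) layout.

// From here on bbox.x* are image rows (vertical) and bbox.y* are image columns (horizontal),

// which is why main() draws cv::Rect(y, x, w, h).

sample_single = sample_single.t();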

int height = image.rows;

int width = image.cols;

// keep the running size as double; int truncation could end the pyramid one level early

double minWH = min(height, width);

int factor_count = 0;

double m = 12. / minSize;

minWH *= m;

std::vector<double> scales;

while (minWH >= 12)

{

scales.push_back(m * std::pow(factor, factor_count));

minWH *= factor;

++factor_count;

}

// main cost (~11 ms): run PNet on every pyramid level

Blob<float>* input_layer = PNet_->input_blobs()[0];

for (int i = 0; i < factor_count; i++)

{

double scale = scales[i];

int ws = std::ceil(height*scale);

int hs = std::ceil(width*scale);

// resize with INTER_AREA, then normalize each pixel to (x - 127.5) * 0.0078125

cv::resize(sample_single, resized, cv::Size(ws, hs), 0, 0, cv::INTER_AREA);

resized.convertTo(resized, CV_32FC3, 0.0078125, -127.5*0.0078125);

// input data

input_layer->Reshape(1, 3, hs, ws);

PNet_->Reshape();

std::vector<cv::Mat> input_channels;

WrapInputLayer(&input_channels, PNet_->input_blobs()[0], hs, ws);

cv::split(resized, input_channels);

// check that cv::split wrote directly into PNet's input blob

CHECK(reinterpret_cast<float*>(input_channels.at(0).data) == PNet_->input_blobs()[0]->cpu_data())

<< "Input channels are not wrapping the input layer of the network.";

PNet_->Forward();

// return result

Blob<float>* reg = PNet_->output_blobs()[0];

//const float* reg_data = reg->cpu_data();

Blob<float>* confidence = PNet_->output_blobs()[1];

GenerateBoundingBox(confidence, reg, scale, threshold[0], ws, hs);

std::vector<FaceInfo> bboxes_nms = NonMaximumSuppression(condidate_rects_, 0.5, 'u');

total_boxes_.insert(total_boxes_.end(), bboxes_nms.begin(), bboxes_nms.end());

}

int numBox = total_boxes_.size();

if (numBox != 0) {

total_boxes_ = NonMaximumSuppression(total_boxes_, 0.7, 'u');

regressed_rects_ = BoxRegress(total_boxes_, 1);

total_boxes_.clear();

Bbox2Square(regressed_rects_);

Padding(width, height);

/// Second stage

ClassifyFace_MulImage(regressed_rects_, sample_single, RNet_, threshold[1], 'r');

condidate_rects_ = NonMaximumSuppression(condidate_rects_, 0.7, 'u');

regressed_rects_ = BoxRegress(condidate_rects_, 2);

Bbox2Square(regressed_rects_);

Padding(width, height);

/// Third stage

numBox = regressed_rects_.size();

if (numBox != 0) {

ClassifyFace_MulImage(regressed_rects_, sample_single, ONet_, threshold[2], 'o');

regressed_rects_ = BoxRegress(condidate_rects_, 3);

faceInfo = NonMaximumSuppression(regressed_rects_, 0.7, 'm');

}

}

regressed_pading_.clear();

regressed_rects_.clear();

condidate_rects_.clear();

}

int main(int argc, char **argv)

{

::google::InitGoogleLogging(argv[0]);

double threshold[3] = { 0.6, 0.7, 0.5 };

double factor = 0.709;

int minSize = 50;

string proto_model_dir = "model";

MTCNN detector(proto_model_dir);

#if FROM_VIDEO

cv::VideoCapture cap(0);

#else

string imgname = "test.jpg";

#endif

cv::Mat frame;

#if FROM_VIDEO

while (cap.read(frame)) {

#else

string imageName = imgname;

frame = cv::imread(imageName);

#endif

clock_t t1 = clock();

std::vector<FaceInfo> faceInfo;

detector.Detect(frame, faceInfo, minSize, threshold, factor);

// clock() measures wall time on MSVC but process CPU time on POSIX systems

std::cout << "Detect " << frame.rows << "x" << frame.cols << " time: " << (clock() - t1) * 1000.0 / CLOCKS_PER_SEC << " ms" << std::endl;

for (int i = 0; i < faceInfo.size(); i++) {

float x = faceInfo[i].bbox.x1;

float y = faceInfo[i].bbox.y1;

float h = faceInfo[i].bbox.x2 - faceInfo[i].bbox.x1 + 1;

float w = faceInfo[i].bbox.y2 - faceInfo[i].bbox.y1 + 1;

cv::rectangle(frame, cv::Rect(y, x, w, h), cv::Scalar(255, 0, 0), 2);

}

for (int i = 0; i < faceInfo.size(); i++) {

FacePts facePts = faceInfo[i].facePts;

for (int j = 0; j < 5; j++)

cv::circle(frame, cv::Point(facePts.y[j], facePts.x[j]), 3, cv::Scalar(0, 0, 255), 3);

}

cv::imshow("img", frame);

#if FROM_VIDEO

if ((char)cv::waitKey(1) == 'q')

break;

}

#else

string resultpath = resultdir + "/" + imgname;

cv::imwrite(resultpath, frame);

cv::waitKey();

#endif

return 0;

}